We are a research lab investigating probabilistic models and programs that are reliable and efficient. We are based at the School of Informatics, University of Edinburgh, within the Institute for Adaptive and Neural Computation (ANC).

- [18th Dec 2025]

- [14th Nov 2025]

- [1st May 2025]

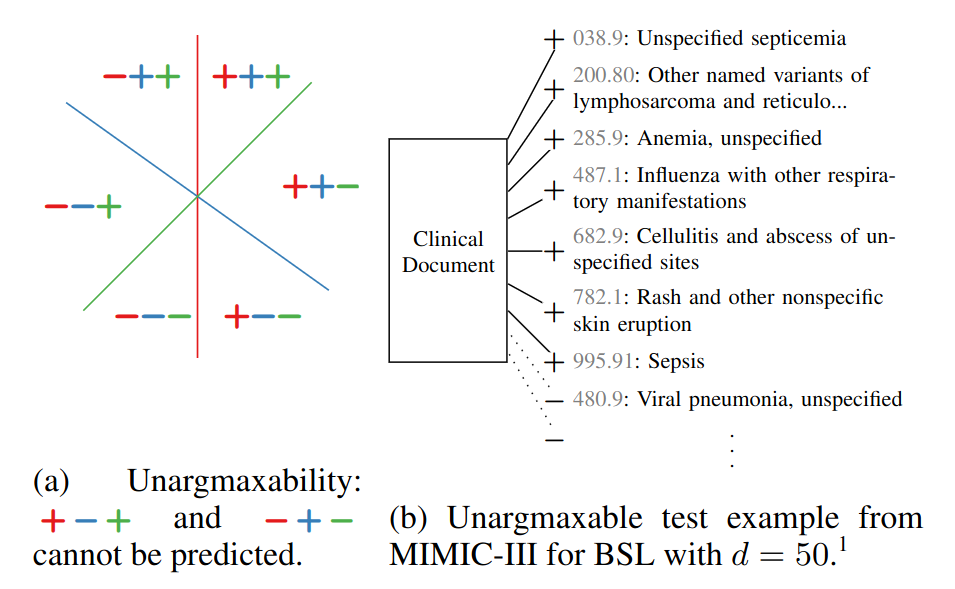

Our paper highlighting how complex query answering benchmarks are far from complex has been accepted at ICML 2025 as a spotlight (top 2.6%)!

- [1st Apr 2025]

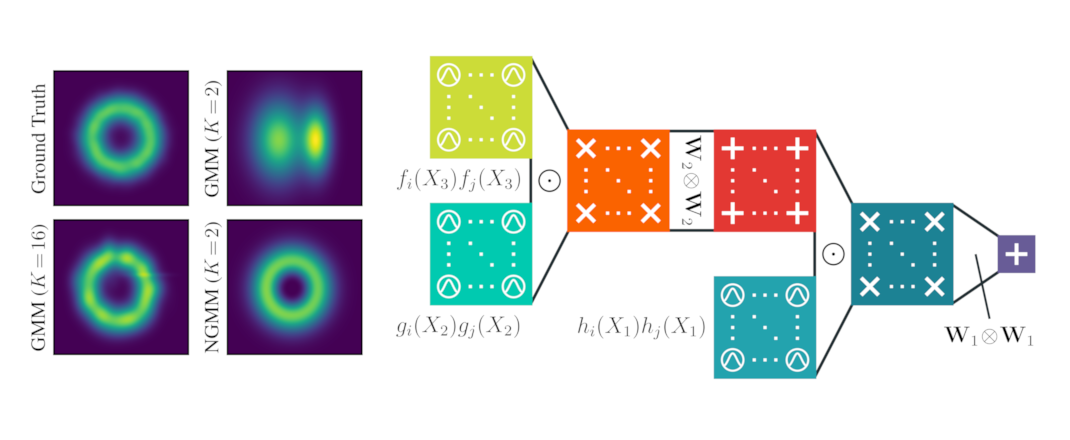

AV will teach a full-day tutorial on “Neuro-symbolic integration” at the Neuro-explicit RTG retreat in Saarbrücken and give a lecture on subtractive mixture models at the GeMSS 2025 summer school.

- [5th Mar 2025]

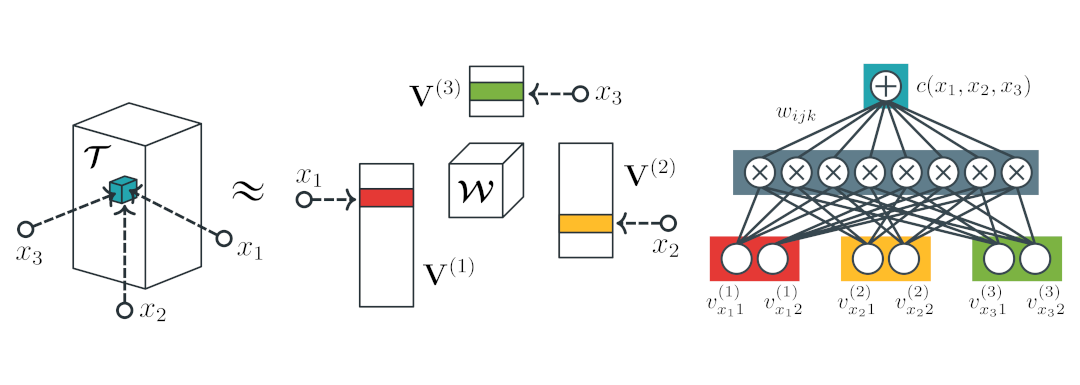

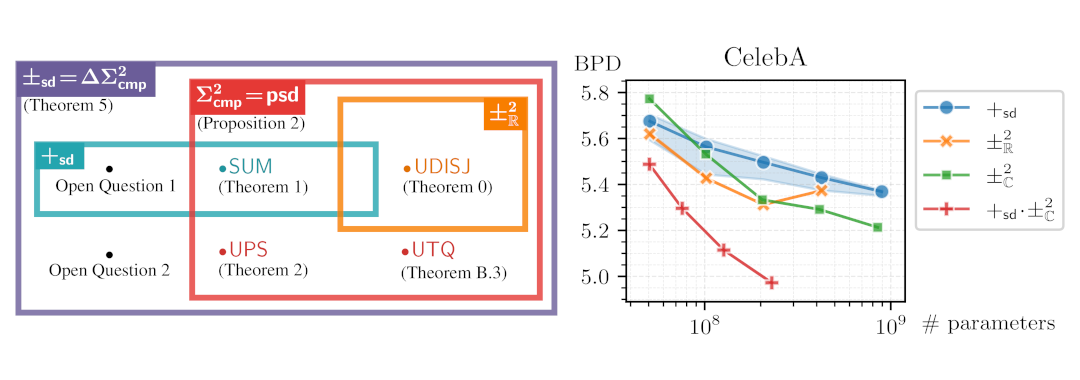

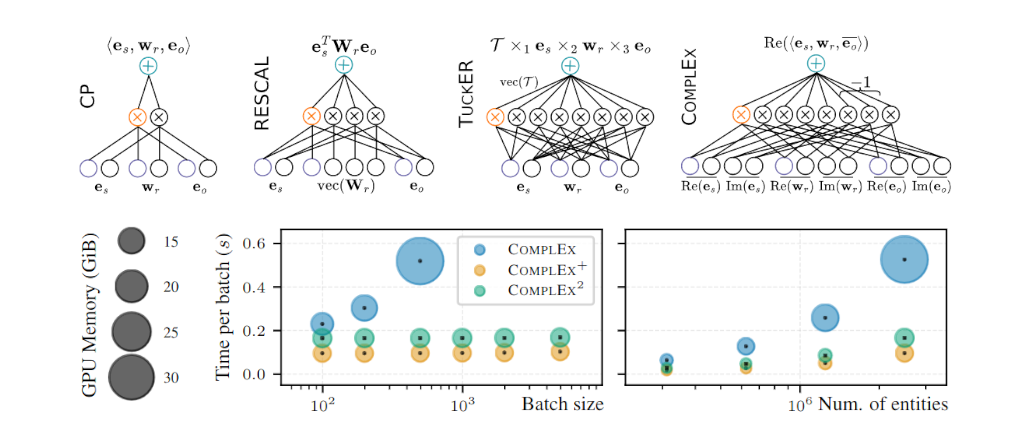

The CoLoRAI workshop and tutorial on connecting tensor factorizations and circuits at AAAI-25 went extremely well!